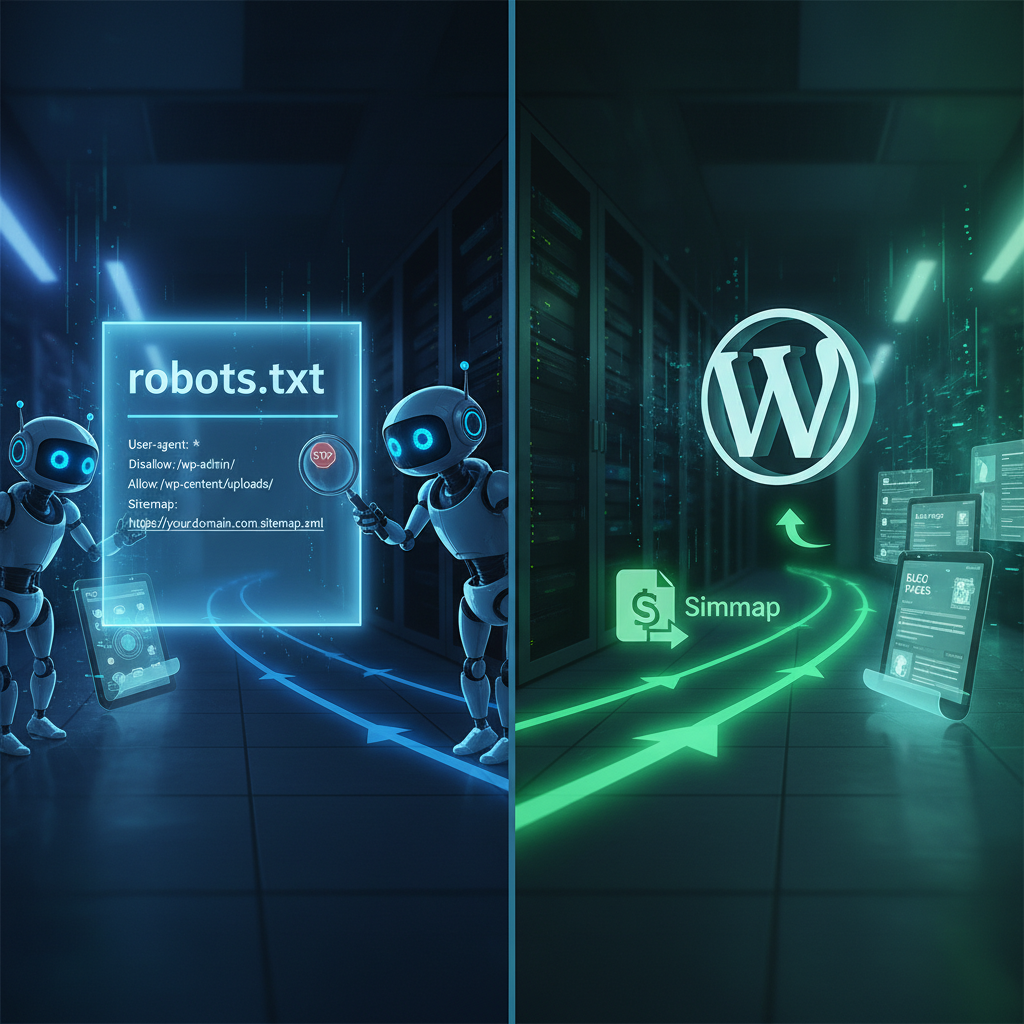

WordPress is a widely used platform for creating websites, offering extensive flexibility and control over site management. One file that shapes how search engines interact with your WordPress site is robots.txt. It acts as a set of guidelines for search engine crawlers, telling them which parts of the site they may crawl.

The robots.txt file lets WordPress site owners control which parts of their website web crawlers request, which in turn influences what gets indexed and how visible the site is. Clear access rules help webmasters shape how their content appears in search engine results.

Using Robots.txt within WordPress allows for effective management of content visibility and accessibility. Site owners can guide search engines to focus on valuable content while restricting access to certain directories that might contain sensitive or redundant information. This control is fundamental in improving the site’s performance and optimization practices. Moreover, by strategically permitting and denying access through Robots.txt, site managers can enhance the site’s resource accessibility and visibility.

This file’s role transcends simple SEO practices, providing a strategic layer in the overall development and management strategy of a WordPress site. Proper configuration of the Robots.txt file ensures that search engines accurately index the site’s content, resulting in improved site management and a more robust online presence.

File Location

The “robots.txt” file plays a crucial role in managing how search engines interact with a website, particularly for WordPress sites. It is located in the root directory of a WordPress installation and communicates with search engines, telling them which areas of the website should or should not be crawled. Controlling crawler access in this way is integral to efficient, organized website management.

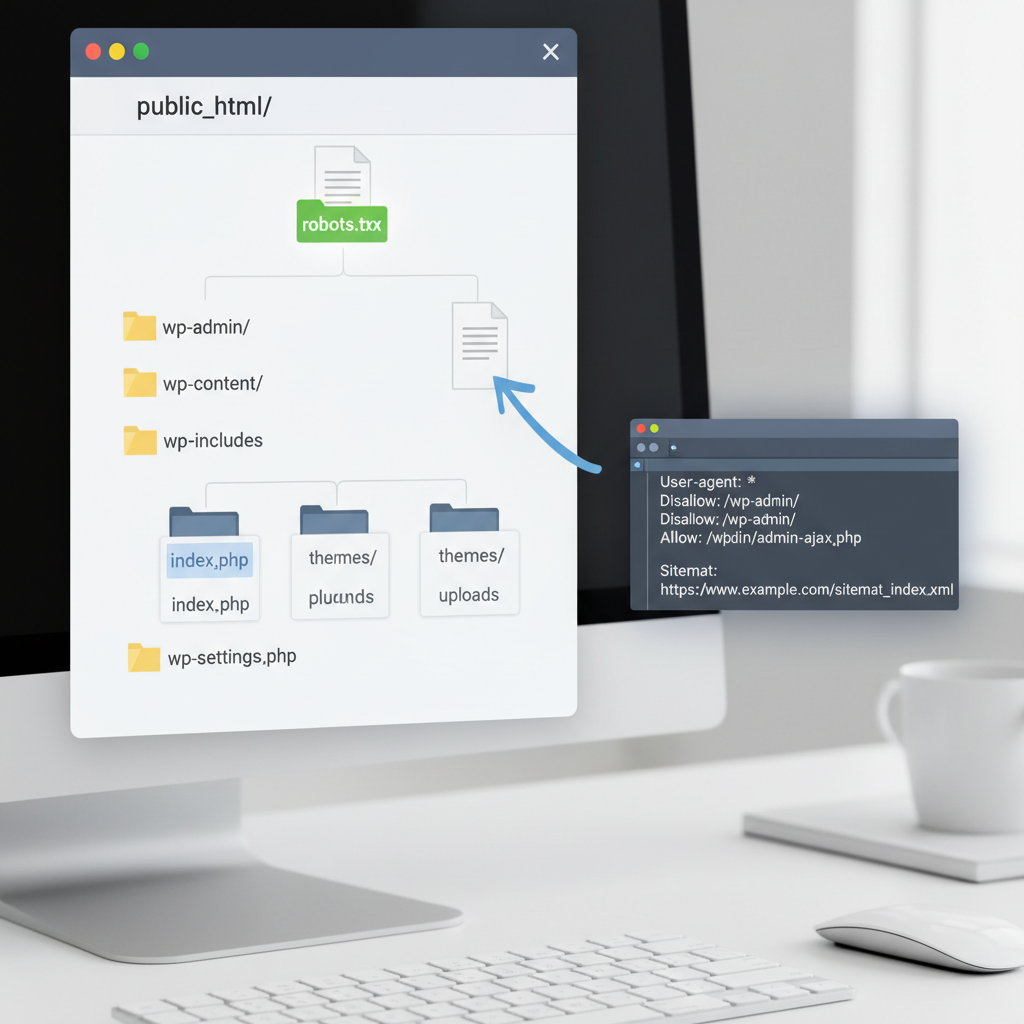

To locate the “robots.txt” file on a WordPress site, you will typically find it in the root directory of your WordPress installation. Here’s a step-by-step guide to accessing it:

- Access the WordPress Directory: Use an FTP client or your hosting provider’s file manager to navigate to the root directory of your WordPress site. This is commonly found under the ‘public_html’ or similar directory in your server file structure.

- Find the “robots.txt” File: In the root directory, look for the “robots.txt” file. If it does not exist, you may need to create it manually. In WordPress, sometimes this file isn’t physically present but is generated virtually via WordPress or a plugin.

- Edit the File: You can open and edit the “robots.txt” file using a text editor within your file manager or through your FTP client. This allows you to configure how search engines interact with your site, providing you control over what content gets indexed and what remains hidden from search engine crawlers.
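One way to tell a physical file from a virtual one is to look for it on disk. The following is a minimal Python sketch that assumes you have local or SSH access to the server's file system. The function name `find_robots_txt` and the example path are illustrative and not part of WordPress.

```python
from pathlib import Path
from typing import Optional


def find_robots_txt(site_root: str) -> Optional[Path]:
    """Return the path to a physical robots.txt in site_root, or None.

    None means no file exists on disk, in which case WordPress (or a plugin)
    may be serving a virtual robots.txt instead.
    """
    candidate = Path(site_root) / "robots.txt"
    return candidate if candidate.is_file() else None


if __name__ == "__main__":
    # "/var/www/public_html" is an assumed path; substitute your own site root.
    found = find_robots_txt("/var/www/public_html")
    print(found if found else "No physical robots.txt found")
```

If the function returns `None` but your site still answers requests for `/robots.txt`, the response is most likely being generated virtually.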

Understanding the placement and function of “robots.txt” ties back into broader best practices in WordPress site optimization and development. It underscores the importance of managing site structure effectively for search engine indexing and optimization. This file serves a pivotal role in ensuring that search engines index the most relevant parts of your website, contributing to more favorable search engine performance. By optimizing the “robots.txt”, WordPress administrators can significantly influence their site’s visibility and efficiency in search engine results, aligning with comprehensive website management principles.

Root Directory Access

Accessing the root directory of a WordPress site is an essential step for users wanting to manage their website’s file structure effectively. WordPress organizes its files systematically, with the root directory serving as the foundational layer where key configuration files like the robots.txt are housed. Recognizing the importance of this directory is crucial for optimizing site performance and enhancing search engine visibility.

To access the root directory, one must first understand its role in the overall WordPress file structure. The root directory, often referred to as the main directory, contains essential configuration files and directories that control how the website operates. Locating this directory is a straightforward process that can significantly impact website management.

Several methods are available for accessing the root directory. One common approach is through an FTP client. Tools like FileZilla can be utilized to securely connect to the website server, allowing users to navigate to the root directory. Once connected, users can find the robots.txt file among other critical files within the directory. It is important to adjust the robots.txt settings appropriately to improve search engine optimization and overall site management.
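The same check can be scripted instead of done in a graphical client. This is a rough sketch using Python's standard `ftplib` module; the helper names, the `public_html` default, and the credentials are placeholders. Plain FTP is unencrypted, so if your host supports it, `ftplib.FTP_TLS` is the encrypted variant.

```python
import posixpath
from ftplib import FTP


def remote_has_robots_txt(ftp, root_dir: str = "public_html") -> bool:
    """Check whether robots.txt is listed in root_dir on an FTP server.

    `ftp` is a logged-in ftplib.FTP (or FTP_TLS) connection. Servers differ
    in whether the listing returns bare names or full paths, so only the
    final path component is compared.
    """
    names = ftp.nlst(root_dir)
    return any(posixpath.basename(name) == "robots.txt" for name in names)


def check_site(host: str, user: str, password: str) -> bool:
    # Host and credentials are placeholders supplied by the caller.
    with FTP(host) as ftp:
        ftp.login(user, password)
        return remote_has_robots_txt(ftp)
```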

Alternatively, the root directory can be accessed through the hosting provider’s file manager. Most hosting platforms provide a user-friendly interface to manage the website’s files directly from the control panel. By navigating to the file manager, users can quickly locate the root directory and make necessary modifications to the robots.txt file, thereby enhancing their site’s configuration and SEO potential.

Understanding file management within the WordPress root directory is vital for users aiming to optimize their websites. By mastering these access techniques, users can better manage their WordPress sites, improve SEO outcomes, and enhance overall performance.

File Functionality

In the context of a WordPress website, file management plays a pivotal role in ensuring smooth operation and enhanced SEO performance. At the heart of this management system is the robots.txt file, a fundamental component that significantly influences how web crawlers interact with your site. This file is crucial in directing search engines, managing permissions, and optimizing web access.

The integration of robots.txt is particularly essential for SEO as it directly controls which parts of a WordPress site are accessible to crawlers. By specifying directives within this file, site administrators can guide web crawlers efficiently, thereby influencing the site’s visibility and ranking on search engines. The ability to configure robots.txt within WordPress allows for precise adjustments according to the site’s needs, enabling better optimization of content exposure and access.

In WordPress, the robots.txt file provides a streamlined way to specify crawler permissions. It uses simple directives that keep crawlers away from certain content, which helps manage the site’s public face. The file defines a clear path for crawlers so that their limited crawl capacity is spent on the pages that matter for SEO.

Understanding the functionality of robots.txt within a WordPress website is crucial for leveraging its configuration capabilities. This knowledge enables the integration of tactics that influence crawler behavior, thereby enhancing the indexing process. Optimal use of this file aligns with WordPress’s broader SEO strategies, balancing accessibility and privacy through precise configurations.

In conclusion, the robots.txt file in WordPress represents a powerful tool for administrators aiming to influence crawl behavior and optimize SEO outcomes. Its configuration options provide a robust framework for directing search engines, ensuring that web crawlers effectively understand and index a site’s content. As such, mastering its use is vital for maximizing a WordPress website’s performance in search engine rankings.

Crawl Instructions

In the WordPress environment, the robots.txt file serves as a pivotal tool for managing search engine crawling and site indexing. This file, located in the root directory of your WordPress website, functions by providing directives to search engine crawlers about which parts of your site can be accessed and which should remain private. Understanding and configuring this file is crucial to ensure that your WordPress site is optimized for search engine indexing and crawling, directly influencing your site’s visibility and SEO performance.

The robots.txt file matters because it lets you control crawler access. With “Allow” and “Disallow” rules, you determine which areas of your site search engines may crawl. This streamlines crawling and keeps irrelevant parts of your site out of the crawl. Keep in mind that robots.txt is publicly readable and only advisory, so it should not be relied on to protect sensitive content.

Within the WordPress platform, configuring the robots.txt file is a straightforward process but requires careful consideration to align with your broader SEO strategy. For instance, you can use the file to disallow access to directories that don’t contribute to SEO value, such as admin pages, thereby focusing crawler attention on valuable content areas. This alignment ensures that your WordPress development practices support a strategic approach to SEO, emphasizing efficient indexing and optimized crawling paths.
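To see how such a rule behaves, you can test it with Python's standard `urllib.robotparser` module. The sketch below is illustrative only: the rule set, the `can_crawl` helper, and the `example.com` URLs are assumptions chosen for the demonstration.

```python
from urllib.robotparser import RobotFileParser

# Example rules: keep every crawler out of the admin area.
RULES = """\
User-agent: *
Disallow: /wp-admin/
"""


def can_crawl(rules: str, url: str, agent: str = "*") -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/wp-admin/options.php",
                "https://example.com/blog/hello-world/"):
        print(url, can_crawl(RULES, url))
```

Python's parser applies rules in file order, whereas major search engines generally use the most specific matching rule, so results can differ for files that mix overlapping “Allow” and “Disallow” lines.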

For effective configuration, keep the rules short and specific. Where necessary, a “Crawl-delay” directive asks crawlers to limit the rate at which they request your content, although it is not part of the core standard and some crawlers, including Googlebot, ignore it. These directives form part of a comprehensive approach to managing site accessibility, helping your WordPress website remain visible and relevant in search engine results.
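As a small illustration of a crawl-delay rule, the sketch below reads the value back with Python's `urllib.robotparser`. The ten-second delay, the rule text, and the `read_crawl_delay` helper are assumptions for the example, not recommended settings.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

# Example rules with a non-standard Crawl-delay line (value in seconds).
RULES_WITH_DELAY = """\
User-agent: *
Crawl-delay: 10
Disallow: /wp-admin/
"""


def read_crawl_delay(rules: str, agent: str = "*") -> Optional[int]:
    """Return the Crawl-delay that applies to `agent`, or None if unset."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.crawl_delay(agent)


if __name__ == "__main__":
    print(read_crawl_delay(RULES_WITH_DELAY))
```

A crawler that honours the directive would wait roughly that many seconds between requests; one that does not will simply skip the line.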

Additionally, the strategic use of the robots.txt file dovetails with WordPress SEO objectives by promoting intentional site organization and targeted content delivery. By maintaining a keen focus on controlling access and managing crawl directives, you reinforce your WordPress website’s role within the broader development initiative, maximizing SEO impact through precise content exposure and exclusion strategies. This nuanced approach supports a seamless integration of technical directives within the user-friendly framework of WordPress, thus enhancing overall site performance and search engine relationships.

Editing Methods

Editing the robots.txt file in WordPress is a crucial step for managing how search engines interact with your website. This file serves as a guide to search engine crawlers, instructing them on which pages to crawl and index. Editing methods within WordPress cater specifically to the customization and optimization needs of your site’s SEO strategy.

WordPress provides several strategies for editing the robots.txt file, seamlessly integrating these methods into the website management framework. One primary approach involves using WordPress plugins designed for SEO. Plugins like Yoast SEO or All in One SEO offer user-friendly interfaces to edit the robots.txt file directly from your WordPress dashboard. These tools often provide additional features that guide you in optimizing your file for better search engine interaction, making this method both practical and efficient.

Another method involves direct editing through the file manager or via FTP access to your WordPress site. This technique offers full control over your site’s directives. You would navigate to the root directory of your WordPress installation, locate the robots.txt file, and make necessary modifications using a text editor. This approach requires a bit more technical knowledge but ensures precision in customizing the file to suit specific SEO goals.

Effectively managing the robots.txt file impacts the site’s SEO performance by dictating the accessibility of content to search engines. Precise configurations can enhance indexing efficiency and ensure important pages are prioritized in search rankings. Properly utilizing these editing methods within WordPress not only integrates well with the site’s structure but also aligns with broader website management practices to promote an optimized digital presence.

Employing these strategies results in a well-configured WordPress site. By optimizing the management and deployment of the robots.txt file, site owners can influence search engine behaviors, enhancing both crawl efficiency and SEO outcomes. These methods contribute significantly to maintaining an effective and dynamic WordPress site, underscoring the importance of careful robots.txt management within the broader context of WordPress development.

Use of Plugins

In the landscape of WordPress development, plugins stand as indispensable tools that augment the basic functionalities of a site, transforming it into a robust and efficient platform. These plugins operate as software components integrated into the WordPress ecosystem, designed to extend its capabilities far beyond its foundational offering. From enhancing security to optimizing speed and boosting SEO performance, the utility of plugins is vast, catering to specific needs that facilitate a seamless user experience and efficient website management.

When considering which plugins to integrate, it’s crucial to align selection with the specific demands of the site. Whether it’s e-commerce, blogging, or a complex business website, the criteria for choosing plugins should include compatibility, user reviews, support, and updates. Plugins such as Yoast SEO for search engine optimization or WooCommerce for e-commerce can greatly enhance a site’s functionality, but care must be taken to ensure they are updated regularly. This not only optimizes performance but also guards against vulnerabilities that could compromise the site.

The integration of plugins is a strategic process that requires attention to configuration settings. Misconfigured plugins can lead to slow performance or conflicts, undermining the effectiveness of the entire website. Therefore, understanding the intricacies of each plugin’s setup is essential for maximizing its benefits.

Maintaining plugins is not merely about adding new functionalities but ensuring their continuous operation through timely updates. Regular updates secure the plugin against potential threats and also improve their efficiency, directly contributing to the site’s overall health and performance.

In conclusion, plugins are pivotal in enhancing the developmental strategies of a WordPress site. By adopting a meticulous approach to selection, configuration, and maintenance, these tools not only extend the inherent features of WordPress but also align with broader site optimization goals, cementing their role as vital components in a well-rounded web development strategy.

Manual Edits

Understanding and managing the robots.txt file in WordPress is essential for enhancing website performance and optimizing SEO. Manual edits in this context play a pivotal role in fine-tuning the accessibility of website content to search engines, thereby influencing indexing and visibility.

Robots.txt is a file that tells search engine crawlers which parts of the site they may crawl, which is crucial for SEO strategies. It is typically located in the root directory of a WordPress installation, which can be accessed via your hosting file manager or an FTP client.

The primary advantage of manual edits to the robots.txt file is the ability to customize crawler instructions, which enhances website efficiency and SEO performance. By configuring which pages or directories are accessible to crawlers, you can prevent duplicate content issues and ensure that important pages are indexed, improving search engine rankings.

To perform manual edits on the robots.txt file, first, access your WordPress site’s file structure. Navigate to the root directory where the robots.txt file resides. Open this file in a text editor to either modify existing entries or add new directives. Common directives include “Disallow” for restricting access to certain paths and “Allow” for enabling access.
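If you prefer to script the edit rather than type it by hand, the steps above can be sketched roughly as follows. This assumes direct file-system access to the site root; the `write_robots_txt` helper and the `.bak` backup name are illustrative choices, and the sketch writes only a single `User-agent: *` group.

```python
import shutil
from pathlib import Path
from typing import Iterable


def write_robots_txt(site_root: str, disallow: Iterable[str],
                     allow: Iterable[str] = ()) -> Path:
    """Write a single `User-agent: *` group to site_root/robots.txt.

    If a robots.txt already exists, it is first copied to robots.txt.bak
    so the previous version can be restored.
    """
    target = Path(site_root) / "robots.txt"
    if target.is_file():
        shutil.copy2(target, target.with_name("robots.txt.bak"))

    lines = ["User-agent: *"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    target.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return target
```

For example, `write_robots_txt("/var/www/public_html", ["/wp-admin/"], ["/wp-admin/admin-ajax.php"])` would block the admin area while leaving the AJAX endpoint reachable; the path shown is a placeholder.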

Review your robots.txt file regularly, because both your site and search engine behavior change over time. Be cautious: an improper configuration can exclude essential pages from search engine indexes and hurt visibility. Understanding the specific requirements of your WordPress site and making careful modifications is therefore critical.
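A simple safeguard is to check a draft rule set against a list of pages that must stay crawlable before you upload it. The sketch below uses Python's `urllib.robotparser`; the `blocked_urls` helper and the `example.com` URLs are assumptions for illustration.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def blocked_urls(rules: str, must_crawl: Iterable[str],
                 agent: str = "*") -> List[str]:
    """Return the URLs from `must_crawl` that `rules` would block for `agent`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [url for url in must_crawl if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    # An over-broad rule: "Disallow: /" blocks the whole site.
    risky = "User-agent: *\nDisallow: /\n"
    print(blocked_urls(risky, ["https://example.com/",
                               "https://example.com/about/"]))
```

An empty result means none of the listed pages are blocked under this parser's interpretation; any URL that is returned deserves a second look before the file goes live.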

These strategic manual edits not only refine site accessibility but also enhance the site’s indexing quality, thereby contributing positively to the overall WordPress development landscape, ensuring a well-optimized and high-performing website.

SEO Importance

In the realm of WordPress websites, SEO plays a pivotal role in making sure that sites are not only visible but also rank competitively in search engine results. A key component of this process is the robots.txt file. Stored on your server, it is an essential part of the SEO toolkit for WordPress users, telling search engines which pages to crawl.

Proper configuration of SEO elements, such as the robots.txt file, significantly impacts site indexing efficiency. By controlling crawler access, this file directly influences the ease with which search engines navigate your website, thereby optimizing visibility and reach. This systematic management ensures that essential pages are indexed while less crucial parts are omitted, leveraging search engine algorithms to improve rankings.

This strategic use of robots.txt within WordPress extends beyond just orchestrating crawl paths. It enables the refinement of site indexing, ensuring that web pages appear in search results where they are most relevant. SEO configurations like these serve to enhance web visibility, a crucial aim for any website seeking to expand its online footprint.

In sum, the relationship between WordPress sites and SEO is both dynamic and essential, with robots.txt serving as a vital instrument in achieving optimal indexing and ranking. Through strategic rule-setting within this file, web administrators are empowered to guide crawler behavior, thus influencing the broader SEO goals of discoverability and heightened performance in search engine results. This process helps to structure the pathways for web crawlers, optimizing site architecture for both user engagement and algorithmic evaluation.

Ranking Influence

Within a WordPress website, ranking influence chiefly revolves around how search engines interpret and index the site’s content. One vital component in this dynamic is the robots.txt file, which directs search engine crawlers on how to interact with the site. Configuring the robots.txt file effectively allows website owners to control crawler activity, significantly impacting site indexing and visibility on search engine results pages (SERPs).

The robots.txt file, residing in the root directory of a WordPress installation, is a simple text file that provides directives to search engine bots about which pages or files they can or cannot request from the website. By strategically configuring this file, site owners can manage which portions of a website are indexed, thereby influencing how a site appears in search results. Proper management of robots.txt can enhance a site’s discoverability by ensuring that only the most relevant and strategic content is accessible to crawlers, optimizing overall SEO performance for WordPress sites.

For WordPress users, configuring the robots.txt file can involve allowing full crawl access to high-value pages while restricting access to duplicate or low-value pages that could harm ranking. Doing so clarifies how bots should traverse the site and creates an efficient crawl path, so crawlers reach important content sooner.