A robots.txt file plays a central role in managing how search engines crawl a WordPress website. It is a plain-text file of directives that tells search engine crawlers which parts of a site they may request and which they should skip. This control is an important part of a coherent SEO strategy, although it governs crawling rather than indexing directly: a blocked URL can still appear in search results if other pages link to it.

A WordPress website uses its robots.txt file to configure how web crawlers interact with the site. The configuration specifies which pages or sections are open for crawling and which are off-limits. That choice shapes what search engines fetch and, ultimately, how visible the site is in search results.
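To see how a crawler reads such directives, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules and paths are hypothetical examples, not a recommended WordPress configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of one section, leave the rest open.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private-section/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes an iterable of lines

for path in ("/private-section/report/", "/blog/hello-world/"):
    verdict = "allowed" if parser.can_fetch("*", path) else "blocked"
    print(f"{path}: {verdict}")
```

The loop prints one verdict per path. Any path that starts with the disallowed prefix is blocked, and everything else stays crawlable.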

WordPress handles robots.txt in a way that matters for SEO: if no physical robots.txt file exists, WordPress generates a virtual one on request. Whether virtual or physical, the file can steer crawlers away from low-value areas. Crawl activity then concentrates on the content that matches the queries the site targets.

Configuring robots.txt is ultimately about managing search engine access so the site gets the most out of search results. With precise crawl directives, a WordPress site can point crawlers toward priority content and conserve crawl budget by excluding irrelevant sections. The file does not change the site's architecture itself. It keeps crawlers focused on the sections that serve the site's goals.

A small text file gives a WordPress site this much influence over search engines, so robots.txt deserves attention throughout development and ongoing optimization. The sections that follow cover where the file lives, how to edit it, and how to configure it.

File Location

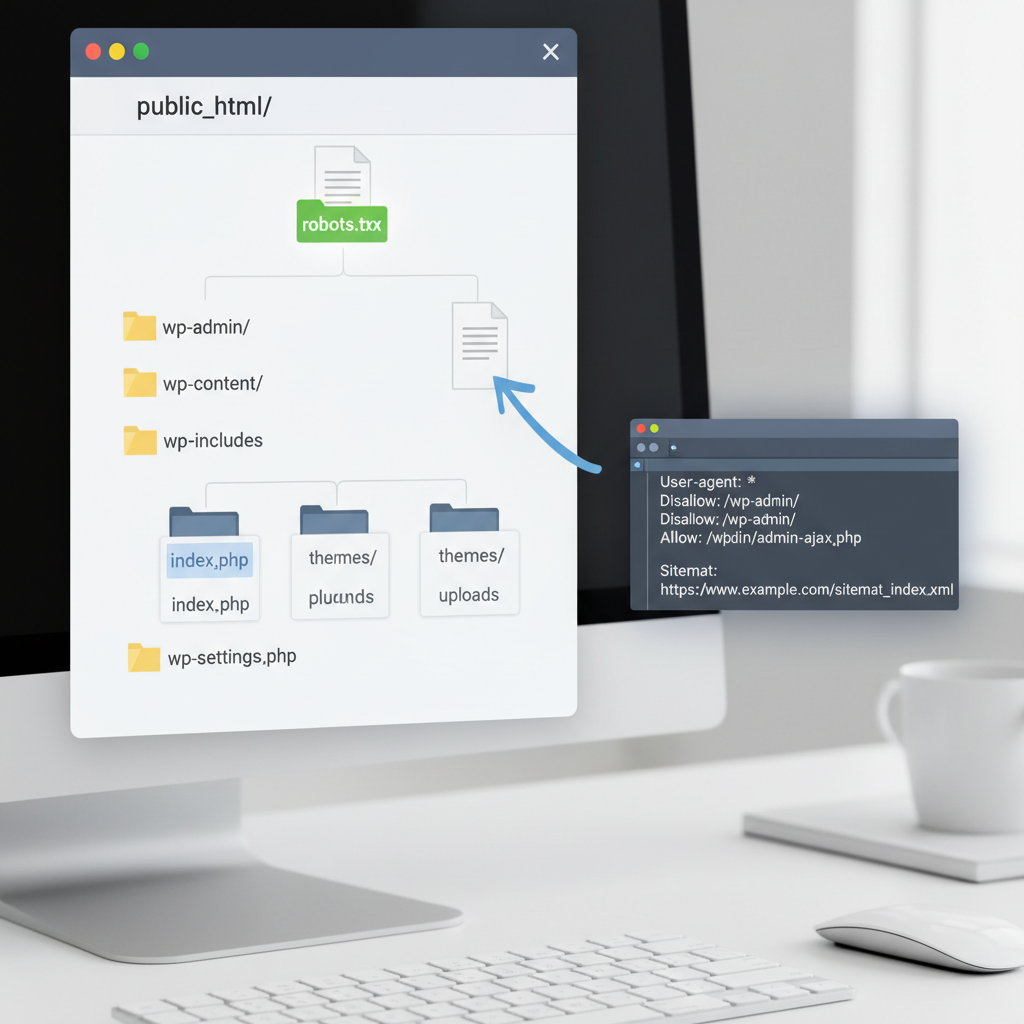

The robots.txt file must be served from the root of the domain, for example https://example.com/robots.txt. Crawlers request it only from that location and use it to decide which parts of the site to visit or avoid. Knowing where the file lives is therefore the first step in controlling how search engines crawl the site.

In a standard setup, WordPress is installed at the domain root, and that root is the directory containing the wp-admin and wp-content folders. Placing the file there lets crawlers such as Googlebot find it immediately and follow its directives.

Customized setups need more care. If WordPress is installed in a subdirectory such as /blog/, crawlers ignore a robots.txt file inside that folder; the file must still be served from the domain root. Each subdomain also needs its own file. If crawlers cannot find a robots.txt file at all (for example, the server returns 404), they generally assume there are no restrictions. A misplaced file therefore silently stops your rules from applying rather than hiding pages.
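Because the file is looked up per scheme, host, and port, you can derive the governing robots.txt URL from any page URL. A minimal sketch using `urllib.parse` (the example.com URLs are placeholders):

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    Crawlers look for robots.txt only at the root of the scheme + host (+ port),
    so a WordPress install under /blog/ is still governed by /robots.txt.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://example.com/blog/hello-world/"))
```

The subdirectory path is discarded, while a non-default port or a subdomain is kept. This is why each subdomain needs its own file.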

To check the file, open your site's main URL with /robots.txt appended (for example, https://example.com/robots.txt). If WordPress is serving its virtual file, you will see its default rules even though no file exists on the server. Seeing the file confirms that it is available, not that the directives do what you intend. A testing tool such as the robots.txt report in Google Search Console helps with the second part.
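How a crawler reacts depends on the HTTP status the URL returns. Here is a minimal sketch that maps status codes to the behavior described in RFC 9309: 2xx means parse the rules, 4xx means the file is unavailable and no restrictions apply, and 5xx means assume everything is disallowed. The function names and result labels are hypothetical, and individual crawlers may differ in details such as how long they tolerate server errors.

```python
from urllib.error import HTTPError
from urllib.request import urlopen

def interpret_status(status: int) -> str:
    """Map a robots.txt HTTP status to crawler behaviour as described in RFC 9309."""
    if 200 <= status < 300:
        return "parse rules"  # file found: obey its directives
    if 400 <= status < 500:
        return "allow all"  # file unavailable (e.g. 404): no restrictions apply
    if 500 <= status < 600:
        return "disallow all"  # server error: assume everything is off-limits
    return "unexpected status"

def check_robots(site: str, timeout: float = 10.0) -> str:
    """Fetch <site>/robots.txt, where site is a root URL like https://example.com."""
    url = site.rstrip("/") + "/robots.txt"
    try:
        with urlopen(url, timeout=timeout) as response:  # redirects are followed
            return interpret_status(response.status)
    except HTTPError as err:  # urlopen raises HTTPError for 4xx/5xx responses
        return interpret_status(err.code)

# Example (needs network access): print(check_robots("https://example.com"))
```

Network failures such as DNS errors raise `URLError` and are left to the caller.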

Correct placement is a prerequisite for everything else robots.txt does. Directives in a file that crawlers never find have no effect on how the site is crawled.

/robots.txt in Root Directory

The robots.txt file is a simple yet powerful tool used in a WordPress website’s root directory to manage the behavior of search engine crawlers. Despite its simplicity, this file has profound implications for a site’s visibility and search engine ranking.

In WordPress, the robots.txt file serves as a set of guidelines for search engine bots. By configuring it, site administrators control which parts of the website crawlers may request. This control helps reduce wasted crawling and supports an SEO strategy tailored to the WordPress installation.

Every webmaster should understand how robots.txt and WordPress interact. If no physical file has been created, WordPress serves a virtual robots.txt. Its default rules block /wp-admin/ while allowing /wp-admin/admin-ajax.php, which front-end features may call, and recent versions may also add a Sitemap line. Customizing the file is often necessary to refine crawler access further.

Blocking a directory such as /wp-admin/ reduces unnecessary crawl load and keeps crawlers focused on content-rich pages, but it does not secure anything. robots.txt is publicly readable and compliance is voluntary, so sensitive areas still need authentication.
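The sketch below runs the default virtual rules through Python's `urllib.robotparser`. It assumes a recent core version with no plugin or filter changes; the exact output varies by version, settings, and plugins.

```python
from urllib.robotparser import RobotFileParser

# Assumption: the rules WordPress typically emits in its virtual robots.txt
# (recent core, no plugins or filters altering the output).
WP_DEFAULT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
"""

parser = RobotFileParser()
parser.parse(WP_DEFAULT.splitlines())

print(parser.can_fetch("*", "/wp-admin/options-general.php"))  # False: admin area blocked
print(parser.can_fetch("*", "/sample-page/"))                  # True: content stays crawlable
```

One caveat applies to /wp-admin/admin-ajax.php. `urllib.robotparser` applies the first matching rule in file order, so it reports that URL as blocked. Googlebot applies the most specific (longest) match and therefore treats it as allowed.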

To optimize a WordPress site's SEO, follow best practices for robots.txt configuration. The directives should reflect the site's theme and plugin environment. The two key directives are "Disallow", which restricts access to specific paths, and "Allow", which overrides a broader "Disallow" for a more specific path. Major crawlers such as Googlebot resolve such conflicts by applying the most specific (longest) matching rule. Proper configuration keeps crawling efficient while leaving important content discoverable.
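"Allow" can only override a broader "Disallow" if the crawler uses longest-match precedence. Here is a minimal sketch of that rule as described in RFC 9309 and Google's documentation: the matching rule with the longest path wins, and "Allow" wins a tie. It supports `*` wildcards and a trailing `$`. Percent-encoding normalization and user-agent group selection are left out for brevity. The function names are hypothetical.

```python
import re

def _pattern_to_regex(pattern: str) -> re.Pattern:
    """'*' matches any character sequence; a trailing '$' anchors the path end."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = "".join(".*" if ch == "*" else re.escape(ch) for ch in body)
    return re.compile(regex + ("$" if anchored else ""))

def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """rules: ("allow" | "disallow", pattern) pairs from one user-agent group."""
    best_len, best_allow = -1, True  # no matching rule means the path is allowed
    for kind, pattern in rules:
        if not pattern:  # an empty "Disallow:" matches nothing
            continue
        if _pattern_to_regex(pattern).match(path):  # match() anchors at the start
            allow = kind == "allow"
            # Longer patterns are more specific; on a tie, Allow wins.
            if len(pattern) > best_len or (len(pattern) == best_len and allow):
                best_len, best_allow = len(pattern), allow
    return best_allow

WP_RULES = [("disallow", "/wp-admin/"), ("allow", "/wp-admin/admin-ajax.php")]
print(is_allowed(WP_RULES, "/wp-admin/admin-ajax.php"))  # True: longer Allow wins
print(is_allowed(WP_RULES, "/wp-admin/options.php"))     # False: only Disallow matches
```

Rule order does not matter here, which is the key difference from first-match parsers.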

Applied well, these practices give site owners real control over where crawlers spend their time, which in turn supports the site's visibility in search results.

Plugins and themes can add directories or generate URLs that affect crawler behavior, so review robots.txt whenever the site's plugins or theme change. Keeping the file current ensures it continues to reflect how the WordPress installation actually serves content to search engines.

Editing Methods

Managing how search engines interact with a WordPress site requires knowing how to edit the robots.txt file. Because the file directs search engine crawlers, the editing method you choose matters for WordPress SEO.

The robots.txt file sets the crawling directives for the whole site, and well-behaved crawlers fetch it before crawling other URLs. For beginners, an SEO plugin is usually the simplest way to edit it. Yoast SEO, for example, includes a file editor that lets you change robots.txt without touching code or server configuration.

Advanced users may prefer to edit a physical robots.txt file directly on the server. Another option is to modify WordPress's virtual file from a theme or plugin through the robots_txt filter. Each approach has trade-offs. Plugins may limit customization but reduce the risk of server-side mistakes. Direct file editing gives full control but requires an understanding of server management and WordPress architecture. On typical server configurations a physical file in the root directory takes precedence over the virtual one, so filter-based changes have no effect once such a file exists.

Consider the implications of each editing choice carefully. Changes to robots.txt affect how crawl budget is spent and which URLs crawlers can reach. A single mistaken rule can hide important content from search engines or waste crawling on low-value pages.

For an effective configuration, follow a few best practices:

- Write directives that keep important navigation and content crawlable.
- Block only the paths that add no search value.
- Avoid blocking the CSS and JavaScript files that pages need to render.
- Keep the rules short enough to review easily.

Managed this way, the file aligns the site's technical configuration with its overall SEO goals.
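Keeping the rules short and reviewable is easier when they are generated from a small, explicit structure. Here is a minimal sketch of a hypothetical helper that renders robots.txt text from a mapping of user agents to rules. It puts a blank line between groups for readability; the helper and its input format are illustrative assumptions, not part of WordPress.

```python
def build_robots_txt(groups: dict[str, list[tuple[str, str]]]) -> str:
    """Render robots.txt text from {user_agent: [(directive, path), ...]}.

    Hypothetical helper: directive is "Allow" or "Disallow"; groups are
    separated by a blank line to keep the file easy to read.
    """
    blocks = []
    for agent, rules in groups.items():
        lines = [f"User-agent: {agent}"]
        lines += [f"{directive}: {path}" for directive, path in rules]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"

print(build_robots_txt({
    "*": [("Disallow", "/wp-admin/"), ("Allow", "/wp-admin/admin-ajax.php")],
}))
```

The output reproduces the rules WordPress typically serves by default, which makes a convenient starting point for review.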

Using Plugins

In WordPress development, managing the robots.txt file through a plugin is a common part of an SEO strategy. The file directly influences how search engines crawl the site, so controlling it effectively matters for search visibility.

Plugins let site administrators customize and manage the contents of robots.txt without extensive technical expertise. Several kinds of plugins offer this control. The most common are general-purpose SEO plugins that include a robots.txt editor alongside their other settings.

These plugins simplify setting up and maintaining robots.txt through a user-friendly interface. They help administrators write directives that keep crawlers away from irrelevant or low-priority pages so crawling concentrates on important content. Keeping the plugin updated helps ensure it stays compatible with new WordPress versions.

Used this way, plugins extend WordPress's functionality and add flexibility and automation to site management. A well-maintained plugin makes crawl management easier to keep under control.

In short, managing robots.txt with a plugin can improve control over crawling and, through it, search visibility. Select and configure plugins carefully, however. An incorrect setting can block content you want search engines to crawl, and some plugins add robots.txt rules on their own. Check the live file after installing or reconfiguring one.

Via FTP

FTP access is another way to manage a WordPress site's "robots.txt" file, which matters for SEO. This section walks through accessing WordPress files with an FTP client.

To begin, install an FTP client such as FileZilla and connect to your server with the credentials from your hosting provider. These typically include the hostname, username, password, and port number. Plain FTP sends credentials unencrypted, so use SFTP or FTPS if your host supports them; FileZilla supports both.

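Once connected, navigate to the root directory of your WordPress installation. This is usually where the "wp-content," "wp-admin," and "wp-includes" folders reside. If WordPress is installed in a subdirectory, go up to the domain's document root instead. Look for the "robots.txt" file there. If it doesn't exist (WordPress may be serving a virtual file), create a plain-text file named "robots.txt." On typical server configurations, the uploaded physical file replaces the virtual one.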

Modifying "robots.txt" can have significant consequences for your site's SEO. The file tells search engines which parts of your site they may crawl. That determines what they can read, and so it influences how your pages appear in search results and how much traffic they receive.

After making changes to “robots.txt,” save and upload the modified file back to the root directory using the FTP client. Always verify the changes with a tool like Google Search Console to ensure search engines interpret your directives correctly.
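Before uploading, a quick local check can catch typos that would otherwise go unnoticed until Search Console reports them. Here is a minimal sketch of a hypothetical linter. It assumes the directive set Google documents (user-agent, allow, disallow, sitemap) plus crawl-delay, which some other crawlers read. It is a rough check, not a full parser, and does not replace Search Console.

```python
KNOWN = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}

def lint_robots(text: str) -> list[str]:
    """Flag common robots.txt mistakes; a rough check, not a full parser."""
    problems = []
    seen_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN:
            problems.append(f"line {number}: unknown directive '{field}'")
        elif field == "user-agent":
            seen_agent = True
        elif field in ("allow", "disallow"):
            if not seen_agent:
                problems.append(f"line {number}: rule before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/' or '*'")
        elif field == "sitemap" and not value.startswith(("http://", "https://")):
            problems.append(f"line {number}: Sitemap needs an absolute URL")
    return problems
```

Running it on a file with a misspelled "Disalow" reports that line instead of silently ignoring it, which is what crawlers would do.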

Used this way, FTP is more than a file-transfer tool: it gives you direct control over a file that affects how search engines crawl your site. Editing "robots.txt" carefully through FTP supports the site's broader search engine optimization goals.

Configuration Uses

The robots.txt file directs how search engines interact with a WordPress site. Configured properly, it lets administrators control how crawlers access different sections of the site. That, in turn, shapes how the site is represented in search results.

One of the file's main functions is to tell crawlers which pages they may access. By prioritizing valuable pages and restricting those that are not essential, administrators focus crawling on the most relevant parts of the site. Blocking crawling is not the same as preventing indexing, though: a disallowed URL can still be indexed from links elsewhere. To keep a page out of search results, use a noindex directive and leave the page crawlable so search engines can see it.

When setting up robots.txt, decide which sections crawlers do not need. Admin areas and server-side scripts that offer nothing to searchers are typical candidates for "Disallow" rules. Be careful with front-end assets, though. If CSS or JavaScript files that pages need to render are blocked, search engines may not render the pages correctly, and many of these files live under /wp-includes/ and /wp-content/. Well-chosen rules reduce the server load caused by crawling and keep crawl activity on relevant pages.
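One way to catch accidentally blocked front-end files is to run a list of asset URLs through a parser before deploying new rules. Here is a minimal sketch using `urllib.robotparser`. The rules, theme name, and asset paths are hypothetical; a real audit would use the URLs your pages actually load.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt: str, paths: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the paths that robots_txt would block for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(agent, path)]

# Hypothetical rules that block /wp-includes/ along with the admin area.
RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""

# Hypothetical front-end assets a theme might load.
ASSETS = [
    "/wp-includes/js/jquery/jquery.min.js",
    "/wp-content/themes/example-theme/style.css",
]
print(blocked_urls(RULES, ASSETS))
```

In this example the script under /wp-includes/ is reported as blocked, a sign that the rule may interfere with rendering.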

A well-maintained robots.txt file also enhances the management of site resources. By specifying which areas of the site search engines can access, administrators can optimize server performance. This careful management prevents unnecessary loads on the server which might arise from frequent crawler visits to unimportant areas.

When these rules align with the site's content and SEO strategy, they help search engines spend their crawling on the pages that matter. That supports the site's visibility in a competitive search landscape. The benefit is indirect, since the file shapes what gets crawled rather than how pages rank.

Allowing Crawlers

Knowing where robots.txt lives and how to manage it is key to a WordPress website's SEO. In the WordPress ecosystem, the file acts as a gatekeeper that tells search engine crawlers which parts of the site they may access. Its placement and setup therefore directly affect what search engines can see.

The file guides crawlers by stating which areas of the site they may fetch. Configured effectively, it makes better use of crawl budget by keeping relevant content accessible and steering crawlers away from less important areas. This also reduces the load crawlers place on the server.

Managing crawler permissions this way is part of a comprehensive WordPress SEO strategy. Rules can apply to all crawlers (User-agent: *) or to a specific crawler named in its own group. A crawler follows only the most specific group that matches it. Proper configuration helps ensure that crucial content receives the crawling attention it needs.
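Groups do not combine: a crawler with its own group ignores the catch-all rules entirely. The sketch below demonstrates this with `urllib.robotparser`. The group contents and the crawler name "ExampleBot" are hypothetical. The library's user-agent matching is a simple case-insensitive substring check, a simplification of how production crawlers match names.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules with a catch-all group and a group for one named crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/

User-agent: Googlebot
Disallow: /staging/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A crawler follows only the group that names it; the '*' group is a fallback.
print(parser.can_fetch("Googlebot", "/drafts/post/"))   # True: its own group has no /drafts/ rule
print(parser.can_fetch("Googlebot", "/staging/"))       # False
print(parser.can_fetch("ExampleBot", "/drafts/post/"))  # False: falls back to '*'
```

To keep a named crawler out of /drafts/ as well, its own group must repeat that rule.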

By reviewing and updating robots.txt regularly, WordPress site managers can keep it in step with changes to their site and to SEO practice. This keeps the file's instructions accurate for search engines.

Disallowing Sensitive Paths

Handling sensitive paths is an important part of WordPress development. Sensitive paths are areas of a site, such as administrative screens or private directories, that crawlers have no reason to visit. The robots.txt file can tell compliant crawlers to stay out of them, but it cannot stop anyone else from accessing them.

The robots.txt file is a simple tool that tells search engine bots which parts of a website they should or should not crawl. Disallowing a path like /wp-admin/ keeps well-behaved crawlers out of the admin area and reduces unnecessary crawling. It does not prevent unauthorized access. The file is public, malicious bots can ignore it, and listing a private directory in it actually advertises that directory. Be cautious about disallowing /wp-includes/ as well: it contains scripts and styles that some front-end pages load, and blocking them can stop search engines from rendering those pages correctly.
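Anyone can read a site's robots.txt, so every disallowed path is effectively published. The minimal sketch below shows how trivially those paths can be listed. The example file and its "private" directory are hypothetical.

```python
def disallowed_paths(robots_txt: str) -> list[str]:
    """List every Disallow path; anyone can do this by reading /robots.txt."""
    paths = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths

# Hypothetical file that (unwisely) names a private directory.
EXAMPLE = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private-backups/   # meant to be secret
"""
print(disallowed_paths(EXAMPLE))
```

This is why private areas should be protected with authentication or server rules rather than hidden behind a "Disallow" line.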

Rules like these tell crawlers clearly which sections they should crawl. The file must be served from the root of the domain for search engines to recognize it. Configured this way, it helps keep crawling orderly and efficient.

Beyond crawling efficiency, handling sensitive paths is part of a holistic approach to WordPress development. Real protection for private areas comes from authentication, file permissions, and server configuration. robots.txt complements those measures by keeping compliant crawlers focused on public content.

SEO Considerations

When developing a WordPress website, understanding the robots.txt file is essential for SEO. The file controls which areas of the website search bots may crawl, which directly affects what search engines can see and show in their results.

In WordPress, a physical robots.txt file typically sits in the root directory alongside core files such as wp-config.php. When no such file exists, WordPress serves a virtual version. From that location the file acts as the entry point for crawlers, defining what they may and may not fetch. Proper configuration keeps critical pages accessible to search engines. For areas that must stay private, rely on access controls rather than robots.txt, which only asks crawlers to stay away.

By configuring robots.txt, website owners decide which parts of the WordPress directory structure crawlers may fetch, using "Allow" and "Disallow" rules for specific directories. For instance, blocking unnecessary URLs keeps crawlers from wasting effort on them. For duplicate content, a canonical tag is usually the better tool, because search engines cannot see the canonical tag on a page they are not allowed to crawl. Focusing crawler effort this way helps high-value pages get crawled.

A well-configured robots.txt file keeps search engines focused on the relevant parts of a WordPress site. It governs crawling rather than indexing and provides no security. By directing crawl activity, however, it supports efficient indexing and, with it, the site's visibility in search. Correct configuration is therefore a cornerstone of effective SEO for WordPress sites.