Understanding the Robots.txt File

The robots.txt file is a plain-text file that tells search engine crawlers which pages or sections of a website they may crawl and which they should skip. It is an important tool for controlling how search engine bots access your website, and it plays a significant role in search engine optimization (SEO). By keeping crawlers away from low-value pages, webmasters can focus crawling on the content that matters most. Note that robots.txt controls crawling, not indexing: a disallowed URL can still appear in search results if other sites link to it, so pages that must stay out of results need a noindex directive instead.

Importance of Editing the Robots.txt File in WordPress

WordPress is one of the most popular content management systems (CMS) for building websites, so web developers and SEO professionals need to know how to edit its robots.txt file. A well-edited file lets search engine crawlers reach the most important pages of a site while keeping them away from irrelevant or duplicate content, so crawling effort is spent where it counts. This can have a significant impact on a site’s SEO performance and on its visibility and ranking in search results.

Optimizing the Robots.txt File for SEO

When editing the robots.txt file in WordPress, web developers and SEO professionals should keep several considerations in mind. First, make sure the file allows search engine crawlers to reach the most important pages of the website, such as the homepage, product pages, and blog posts. At the same time, prevent search engine bots from crawling irrelevant or duplicate content, such as admin pages, login pages, and internal search result pages.
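As a minimal sketch of that split, the following uses Python’s standard urllib.robotparser to check a hypothetical set of WordPress-style rules against a few typical URLs. The domain example.com, the product and blog paths, and the /?s= search prefix are placeholders; adjust them to your own site. Python’s parser only does plain prefix matching (no * or $ wildcards), so keep rules this simple if you want it to agree with Google.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for a WordPress site at https://example.com:
# keep crawlers out of the admin area, the login page, and internal search.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-login.php
Disallow: /?s=
"""


def check_urls(robots_txt, urls, agent="*"):
    """Return {url: bool} saying whether `agent` may crawl each URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


results = check_urls(ROBOTS_TXT, [
    "https://example.com/",                      # homepage
    "https://example.com/product/blue-shirt/",   # product page (placeholder)
    "https://example.com/blog/hello-world/",     # blog post (placeholder)
    "https://example.com/wp-admin/",             # admin area
    "https://example.com/wp-login.php",          # login page
    "https://example.com/?s=shirt",              # internal search results
])

for url, allowed in results.items():
    print("allowed" if allowed else "blocked", url)
```

The first three URLs come out allowed and the last three blocked, which is the split the paragraph above describes.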

Best Practices for Editing the Robots.txt File in WordPress

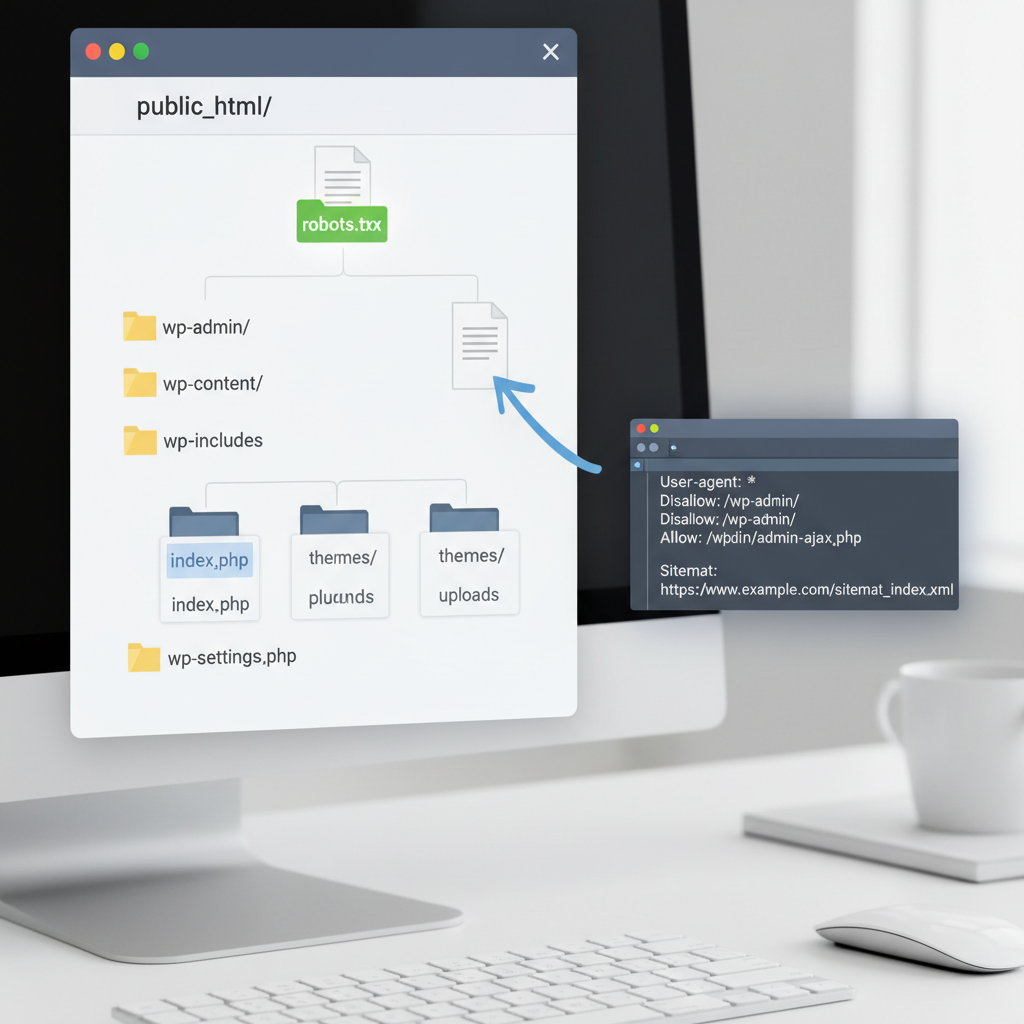

To optimize the robots.txt file for SEO, web developers and SEO professionals should follow several best practices. Use the “Disallow” directive to keep crawlers out of specific pages or directories, use the “Allow” directive to re-open a specific path inside a disallowed directory, and use the “Sitemap” directive to give the full URL of the website’s XML sitemap. Review and update the file regularly so that it keeps pace with the changing needs of the website and its SEO strategy.
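The three directives can be seen working together in the following sketch, modelled on the rules WordPress’s built-in virtual robots.txt uses for the admin area. It parses the file with Python’s standard urllib.robotparser (site_maps() needs Python 3.8 or later). The domain and sitemap URL are placeholders. One assumption to flag: Python’s parser applies the first rule that matches, in file order, whereas Google applies the most specific (longest) matching rule regardless of order. Listing the Allow line first makes both readings agree.

```python
from urllib.robotparser import RobotFileParser

# Placeholder site: block /wp-admin/ but re-open admin-ajax.php,
# and advertise the XML sitemap. Allow comes first because Python's
# parser uses the first matching rule (Google uses the longest match).
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/

Sitemap: https://example.com/wp-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("*", "https://example.com/wp-admin/admin-ajax.php"))  # True
print(parser.can_fetch("*", "https://example.com/wp-admin/options.php"))     # False
print(parser.site_maps())  # ['https://example.com/wp-sitemap.xml']
```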

Conclusion

In conclusion, editing the robots.txt file in WordPress is an important part of website optimization and SEO. A properly configured file lets web developers and SEO professionals control how search engine crawlers access their website and make sure the most important pages are open to crawling. This can have a significant impact on a site’s visibility and ranking in search results, which makes robots.txt an essential tool for any website owner looking to improve their SEO performance.

Accessing the robots.txt file in WordPress

As a web developer, it’s important to have a good understanding of how to access and edit the robots.txt file in WordPress. This file plays a crucial role in controlling how search engines crawl and index your website, so being able to access it is essential for optimizing your site’s visibility.

Accessing the robots.txt file through the WordPress dashboard

If you prefer to access the robots.txt file through the WordPress dashboard, you can do so by installing a plugin such as “Yoast SEO” or “All in One SEO Pack.” These plugins provide a user-friendly interface for editing the robots.txt file without needing to access it directly via FTP.

Accessing the robots.txt file via FTP

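If you prefer to access the robots.txt file via FTP, connect to your website’s server with an FTP client such as FileZilla or Cyberduck. Once connected, navigate to the root directory of your WordPress installation to locate the robots.txt file. If no robots.txt file is there, WordPress is serving a virtual one that it generates on the fly. In a standard setup, uploading a physical robots.txt file to that directory replaces it.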

Locating the robots.txt file

The robots.txt file is typically located in the root directory of your WordPress installation. If you’re accessing it through the WordPress dashboard, you can find it by navigating to “SEO” > “Tools” > “File Editor” (if using Yoast SEO) or “All in One SEO” > “Feature Manager” > “Robots.txt” (if using All in One SEO Pack).
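Whichever route you take, crawlers only ever request robots.txt from the root of the host (scheme, domain, and port). A copy placed in a subdirectory, for example a WordPress install under a hypothetical /blog/ folder, is never read. The helper below is a small sketch using Python’s standard urllib.parse; robots_txt_url is a hypothetical name. It derives the robots.txt URL that governs any page. Opening that URL in a browser shows the file your site is actually serving, including WordPress’s virtual one.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL that governs `page_url`.

    Crawlers look for robots.txt only at the root of the host, so the
    page's path, query, and fragment (including any WordPress
    subdirectory) are discarded; scheme, host, and port are kept.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/hello-world/"))
# https://example.com/robots.txt
```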

Making the robots.txt file accessible for editing

If you’re accessing the robots.txt file via FTP, you may need to adjust the file permissions to make it accessible for editing. This can typically be done by right-clicking on the file, selecting “File Permissions” or “Chmod,” and adjusting the permissions to allow for editing.
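Permission dialogs in FTP clients usually take a numeric mode. 644 (owner can read and write, everyone else can only read) is a common setting for a static file such as robots.txt, but the right value depends on your host, so treat it as an assumption. To show what that number means, the sketch below sets the same mode on a throwaway local file with Python’s os.chmod. make_editable is a hypothetical helper. On a live server you would set the mode through your FTP client or hosting panel instead.

```python
import os
import stat
import tempfile


def make_editable(path):
    """Set mode 0o644 (rw-r--r--) on `path` and return the resulting mode.

    0o644 lets the owner edit the file while everyone else can only read
    it; whether this is right for your host is an assumption to verify.
    """
    os.chmod(path, 0o644)
    return stat.S_IMODE(os.stat(path).st_mode)


# Demonstrate on a temporary local file rather than a live server.
with tempfile.TemporaryDirectory() as tmp:
    robots = os.path.join(tmp, "robots.txt")
    with open(robots, "w") as fh:
        fh.write("User-agent: *\nDisallow: /wp-admin/\n")
    os.chmod(robots, 0o444)            # simulate a read-only file
    print(oct(make_editable(robots)))  # 0o644 on POSIX systems
```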

Editing the robots.txt file

Once you’ve located the robots.txt file and made it editable, open it in a plain-text editor such as Notepad or Sublime Text. From there, you can make any necessary changes, such as adding directives that allow or disallow specific search engine bots from crawling certain parts of your website.

When it comes to controlling the behavior of search engine crawlers on your website, the robots.txt file is a powerful tool. By editing this file, you can dictate which pages and directories search engines should crawl and which ones they should skip. This can have a significant impact on your website’s visibility in search engine results.

Rules and directives

You can add several rules and directives to the robots.txt file to control how search engine crawlers behave. Each group of rules begins with a “User-agent” line naming the crawler the rules apply to (an asterisk matches all crawlers). The most common directive is “Disallow,” which tells crawlers not to crawl specific pages or directories. The “Allow” directive marks paths that may be crawled, even inside a directory that is otherwise disallowed. Finally, the “Crawl-delay” directive asks crawlers to wait a given number of seconds between requests to your website. Some crawlers, such as Bingbot, honor it, but Google ignores it.
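The sketch below shows User-agent groups and Crawl-delay in practice. It is a hypothetical file, parsed with Python’s standard urllib.robotparser, that asks Bingbot to wait 10 seconds between requests. A crawler follows only the group that matches it most specifically and ignores the catch-all “*” group, so the Disallow rule has to be repeated inside the Bingbot group. The 10-second value and the paths are illustrative.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: Bingbot gets its own group with a crawl delay;
# everyone else falls back to the "*" group. Google ignores Crawl-delay.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10
Disallow: /wp-admin/

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.crawl_delay("Bingbot"))    # 10
print(parser.crawl_delay("Googlebot"))  # None: the "*" group sets no delay
```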

Examples of common directives

One common use of the robots.txt file is to keep search engines from crawling certain pages or directories, such as pages with duplicate content. In this case, you would use the “Disallow” directive to specify which pages or directories crawlers should skip. Keep in mind that robots.txt is publicly readable and purely advisory, so it is not a way to protect sensitive information. Anyone can read the paths you list, and badly behaved bots can ignore them, so protect private content with authentication instead.
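Disallow values are matched as URL-path prefixes, so a trailing slash changes what a rule covers. This sketch uses Python’s standard urllib.robotparser with hypothetical /drafts and /duplicates/ paths to make that visible. Without the slash, /drafts also catches /drafts-archive/. With the slash, /duplicates/ leaves the bare /duplicates URL crawlable.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths; Disallow values are prefixes of the URL path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts
Disallow: /duplicates/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/drafts/post-1/", "/drafts-archive/", "/duplicates/page-2/", "/duplicates"]:
    print(path, parser.can_fetch("*", "https://example.com" + path))
# /drafts/post-1/ False
# /drafts-archive/ False
# /duplicates/page-2/ False
# /duplicates True
```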

On the other hand, you may want to give one crawler access to an area that other crawlers are kept out of, for example letting Googlebot crawl a section that is disallowed for everyone else. In this case, you would start a separate group with a “User-agent” line naming that crawler, then use the “Allow” directive within that group to specify the paths it may crawl.
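The following sketch, using Googlebot as the example crawler and a hypothetical /beta/ section, shows how separate groups give one crawler access that others lack. Each crawler follows the group whose User-agent line matches it and ignores the rest, so Googlebot never reads the “*” group’s Disallow. The check uses Python’s standard urllib.robotparser; the domain and path are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical layout: Googlebot may crawl /beta/, all other crawlers may not.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /beta/

User-agent: *
Disallow: /beta/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/beta/new-feature/"
print(parser.can_fetch("Googlebot", url))  # True: its own group allows /beta/
print(parser.can_fetch("Bingbot", url))    # False: falls back to the "*" group
```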

These are just a few examples of the many ways in which the robots.txt file can be used to control search engine crawlers’ behavior. By understanding the different rules and directives that can be added to this file, you can effectively manage how search engines crawl your website.

Best practices for editing robots.txt in WordPress

When it comes to editing the robots.txt file in WordPress, it’s important to approach the task with caution and follow best practices to avoid inadvertently causing issues for your website. Here are some tips to help you navigate the process effectively.

Use caution when adding directives

When editing the robots.txt file, it’s crucial to exercise caution when adding directives. Adding the wrong directive can inadvertently block important pages or resources from being crawled by search engines, which can negatively impact your website’s visibility and performance. Before adding any new directives, carefully consider the potential implications and test thoroughly to ensure they have the intended effect.

Regularly review and update the robots.txt file

It’s essential to regularly review and update the robots.txt file to ensure it aligns with your website’s current structure and content. As your website evolves, new pages and resources may be added, and existing ones may be removed or relocated. Failing to update the robots.txt file accordingly can result in search engines being unable to access and index your website’s content properly, leading to decreased visibility in search results.

Test changes thoroughly

Before implementing any changes to the robots.txt file, test them thoroughly to make sure they have the desired effect. Use tools such as the robots.txt report in Google Search Console, which replaced the older robots.txt Tester, to check that Google can fetch and parse your file, and make sure important pages and resources are not inadvertently blocked. After making changes, monitor your website’s performance and search engine visibility so you can spot any issues they may have caused.
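One way to make “test thoroughly” concrete is to check a list of must-crawl URLs against both the current and the proposed file before uploading anything. This is a hypothetical sketch using Python’s standard urllib.robotparser; blocked_urls, the URL list, the theme path, and both rule sets are placeholders. robotparser applies the first matching rule in file order and does not understand * or $ wildcards, while Google applies the most specific matching rule. Treat it as a quick local check, and confirm the live result in Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, agent="Googlebot"):
    """Return the set of `urls` that `agent` may not crawl under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url for url in urls if not parser.can_fetch(agent, url)}


# Hypothetical pages and assets that must stay crawlable.
IMPORTANT = [
    "https://example.com/",
    "https://example.com/blog/hello-world/",
    "https://example.com/wp-content/themes/mytheme/style.css",
]

current = "User-agent: *\nDisallow: /wp-admin/\n"
proposed = "User-agent: *\nDisallow: /wp-admin/\nDisallow: /wp-content/\n"

# URLs the proposed file would block that the current file does not.
newly_blocked = blocked_urls(proposed, IMPORTANT) - blocked_urls(current, IMPORTANT)
print(sorted(newly_blocked))
# ['https://example.com/wp-content/themes/mytheme/style.css']
```

A non-empty result is a signal to revise the proposed file before it goes live. In this case, it would stop crawlers from fetching the theme’s stylesheet.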

Document your changes

Keeping a record of the changes you make to the robots.txt file can be invaluable for troubleshooting and maintaining transparency within your team. Documenting the date, nature, and purpose of each change can help you track the evolution of your directives and understand the reasoning behind specific decisions. This can be particularly useful when collaborating with other team members or when troubleshooting unexpected issues related to the robots.txt file.
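A change log does not need special tooling. The helper below is a hypothetical sketch using only Python’s standard difflib and datetime modules. It stamps each edit with a date and a short reason, then records the exact lines that changed as a unified diff. The file contents, reason, and date in the usage lines are illustrative placeholders.

```python
import difflib
from datetime import date


def change_record(old, new, reason, when=None):
    """Build a dated, human-readable record of one robots.txt edit.

    `reason` is free text explaining the change; the unified diff
    underneath shows exactly which lines were added or removed.
    """
    when = when or date.today()
    diff = difflib.unified_diff(
        old.splitlines(keepends=True),
        new.splitlines(keepends=True),
        fromfile="robots.txt (before)",
        tofile="robots.txt (after)",
    )
    return f"{when.isoformat()}: {reason}\n" + "".join(diff)


old = "User-agent: *\nDisallow: /wp-admin/\n"
new = "User-agent: *\nAllow: /wp-admin/admin-ajax.php\nDisallow: /wp-admin/\n"
print(change_record(old, new, "Let crawlers reach admin-ajax.php", date(2024, 1, 15)))
```

Appending each record to a shared text file, or committing the file to version control, keeps the history in one place for the whole team.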

How do I access and edit the robots.txt file in WordPress?

To access and edit the robots.txt file in WordPress, you can use a file manager plugin or access your website’s files through FTP. Once you have located the robots.txt file, you can edit it using a text editor or directly within the file manager plugin.

What should I include in my robots.txt file?

In your robots.txt file, you can include directives that instruct search engine crawlers on how to interact with your website. This can include specifying which areas of your site should be crawled and indexed, as well as any areas that should be excluded from crawling.

How do I test if my robots.txt file is working correctly?

You can check your robots.txt file with the robots.txt report in Google Search Console, which replaced the older robots.txt Tester. The report shows which robots.txt files Google found for your site, when they were last crawled, and any parsing errors or warnings. To check whether a specific URL is blocked for Googlebot, use the URL Inspection tool.

Can I use a plugin to edit my robots.txt file in WordPress?

Yes, there are several WordPress plugins available that allow you to edit your robots.txt file directly within the WordPress dashboard. These plugins can provide a user-friendly interface for making changes to your robots.txt directives.

What are some common mistakes to avoid when editing the robots.txt file?

Some common mistakes to avoid when editing the robots.txt file include blocking important areas of your website from being crawled, using incorrect syntax in the directives, and inadvertently blocking access to essential resources such as CSS and JavaScript files.
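One syntax mistake that is easy to miss is the difference between an empty Disallow value, which blocks nothing, and a single slash, which blocks the entire site. The sketch below uses Python’s standard urllib.robotparser to show how one character flips the result. can_crawl is a hypothetical helper, and example.com is a placeholder.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt, url, agent="*"):
    """True if `agent` may crawl `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


home = "https://example.com/"
# An empty Disallow value means "nothing is disallowed"...
print(can_crawl("User-agent: *\nDisallow:\n", home))    # True
# ...but a single slash disallows every path on the site.
print(can_crawl("User-agent: *\nDisallow: /\n", home))  # False
```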